Web Scraping Legal Context

As the need for data grows, so does the need for clearer regulation

About Data Boutique

Data Boutique is a web-scraped data marketplace. If you’re looking for web data, there is a high chance someone is already collecting it. Data Boutique makes it easy and safe to buy data from them.

What is the legal context for Web Scraping?

My long-time business partner Pierluigi Vinciguerra and I have been in the web-scraped data business for quite a while now. We have been collecting and selling data to some of the largest companies in Asia, Europe, the UK, and the USA, in sectors ranging from real estate and hedge funds to consumer electronics, fashion, and luxury.

We have always paid great attention to the legality of what we do, and if you use web data or are involved in web scraping, you should care too.

While web scraping is as old as the Internet itself, it has never found a proper place in the legislation of many countries (here is a recent post by the law firm Quinn Emanuel on the US market).

Although lawmakers have not given it a dedicated space, it is still so wi(l)dely used that some high-level conclusions can be drawn.

Self-guidance organizations

Professional investors, such as hedge funds, were among the first to adopt some form of self-guidance for web scraping, as they operate in an SEC-regulated market, with a specific focus on Material Non-Public Information (MNPI) and the risks of insider trading.

The main self-guidance organizations we know of are the Investment Data Standards Organization (IDSO) and the Alternative Data Council of FISD. They have done, and still do, an excellent job of shedding light on a complex and sometimes highly technical subject. Please comment if you know of other organizations, especially in other industries.

The rise of genAI and the need for web data

The recent surge in demand for web data to feed genAI, combined with Silicon Valley’s “move fast and break things” philosophy, has driven a rise in lawsuits against companies of every size.

As a reminder, here are three recent lawsuits related to web scraping that come to mind (the list is far from exhaustive):

September 2023: a class action was filed against Microsoft over how ChatGPT used private data

July 2023: Google was hit with a class-action lawsuit over AI data scraping

June 2023: a lawsuit claimed OpenAI violated US authors' copyrights to train its AI chatbot

Since Data Boutique’s mission is to promote the safe and fair use of web-scraped data, we will use this post to outline the high-level legal context. We hope this will help someone out there.

Web Scraping Legal Context

General advice: we are not lawyers. Don’t take our word as gospel, and speak to your legal counsel if you have questions about web scraping. Data regulation varies materially by geography and changes over time. We do our best to provide helpful information, but this is our own interpretation and may be inaccurate.

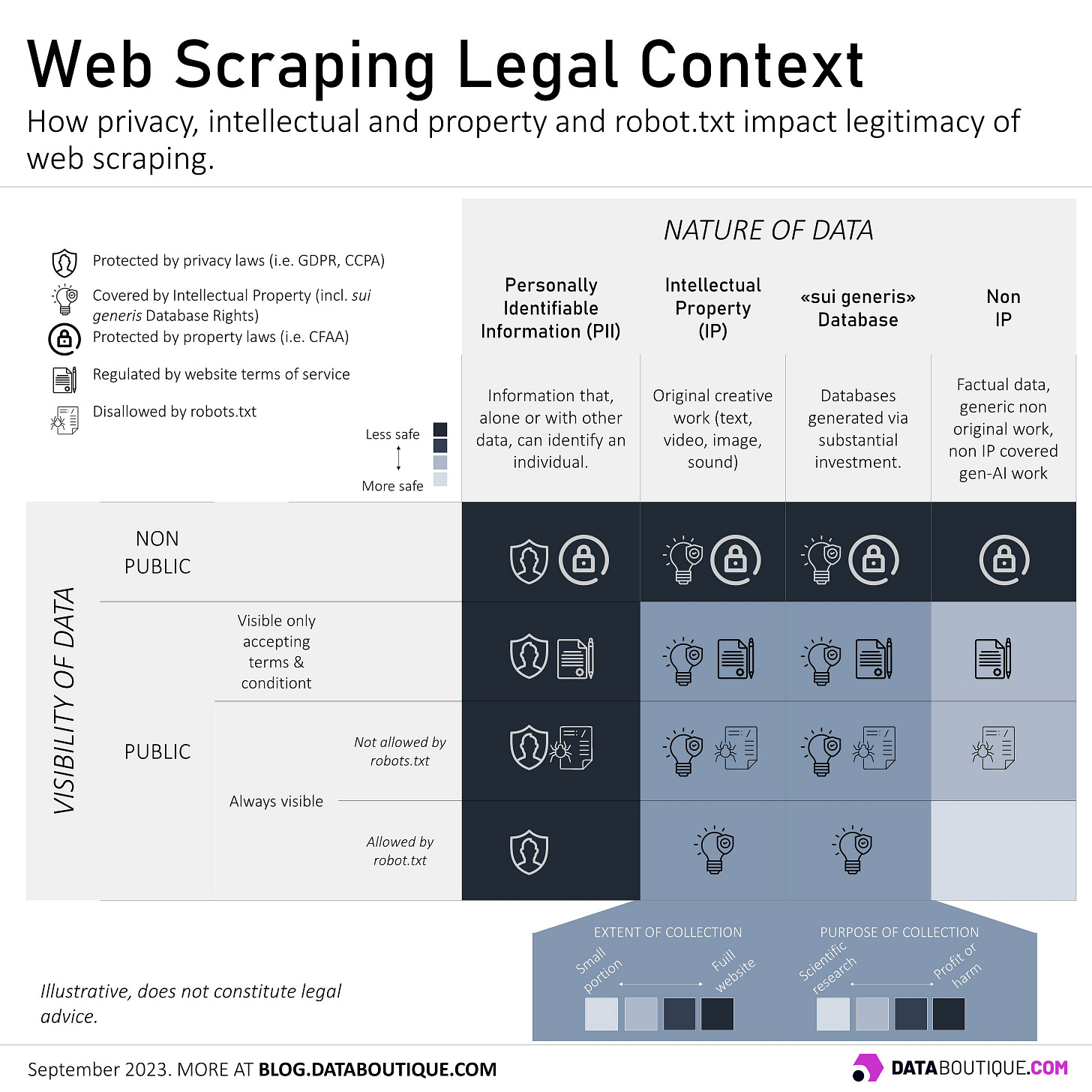

When it comes to web scraping, there are two elements we need to consider:

The nature of the data, which determines our right to buy, use, transform, and sell it

The visibility of the data, which concerns only the act of collecting it, and whether that act breaches property laws or existing contracts or agreements

1. The nature of the data collected

Regulators and lawmakers focus mainly on protecting citizens’ privacy and the interests of copyright holders. There are three main types of protected data:

Personal Data

Intellectual Property

“Sui generis” Database

Personal Data

Personally Identifiable Information (PII) is information that, alone or combined with other data, can help identify an individual. Privacy laws such as the European General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) protect citizens’ rights over this kind of information.

If you collect an individual’s name from LinkedIn without their consent, for example, you are very likely breaching privacy rules. So, as a rule of thumb, don’t.
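A practical consequence for a scraping pipeline is to avoid storing obvious personal data in the first place. The sketch below is a crude illustration, assuming two hypothetical regular expressions (one for email addresses, one for phone numbers written with a leading “+” country code) that redact what they can recognize before records are saved. It is not a compliance tool: as the usage shows, a plain name like the LinkedIn example passes straight through, so pattern matching cannot replace the decision not to collect PII.

```python
import re

# Hypothetical, deliberately simple patterns: they catch common email
# addresses and phone numbers written with a leading "+" country code.
# They miss many formats and cannot recognise names at all.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+\d[\d\s().-]{6,}\d")


def redact_pii(text: str) -> str:
    """Replace recognisable emails and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    text = PHONE_RE.sub("[REDACTED_PHONE]", text)
    return text


if __name__ == "__main__":
    scraped = "Contact jane.doe@example.com or +39 02 1234 5678 for the Blue T-shirt, 19.99 EUR"
    print(redact_pii(scraped))
    # Contact [REDACTED_EMAIL] or [REDACTED_PHONE] for the Blue T-shirt, 19.99 EUR

    # Names are not recognised, so this prints the input unchanged:
    print(redact_pii("Jane Doe, Head of Sales"))
```

Non-PII fields such as the product name and price survive untouched, which is the kind of data the rest of this post discusses.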

Intellectual Property

Intellectual Property (IP) covers content that results from human creativity: images, audio, written content, computer code, and so on. Variations of this concept have existed since 1474, and IP is very well protected from a legal standpoint.

To be considered Intellectual Property, content needs to be original and have some form of uniqueness. Long-form text, like a New York Times article, fits the definition. A generic phrase like “Blue T-shirt” does not.

In general, to use, transform, or sell intellectual property, you need the IP owner’s consent.

There are exceptions, such as the US fair use doctrine for copyrighted material, and there is an ongoing debate about extending it to AI training (which would allow copyrighted material to be used to train AI even without the owner’s consent). Other exceptions to the use of copyrighted material exist around the world, and the situation keeps evolving.

“Sui Generis” Database

The Sui Generis Right (or Database Right) protects databases that required great effort to build, regardless of their originality. If there was a substantial investment, either financial or in work, in obtaining, verifying, or presenting the database’s contents, the law protects it. This right exists in the EU, the UK, and some other countries but, as of today, not in the USA.

There are exceptions based on the purpose of the data collection (academic and scientific research, for example) or on its extent (scraping a small part is tolerated; scraping the whole website is not).

What is not covered?

If scraping personally identifiable information (PII), IP-protected content, or protected EU databases exposes you to risk, scraping data that is neither PII nor IP-protected does so to a lesser degree. This is where it gets interesting (and yes, debated).

When is content not covered by IP? When is a database not covered by the sui generis right?

Are an airline’s prices and timetables covered by some form of IP? A 2015 ruling by the European Court of Justice stated that Ryanair’s flight prices and timetables did not constitute IP content (prices and timetables are factual measures, lacking the intrinsic element of originality) and that the database was not entitled to protection under EU database legislation. Web scrapers can, however, still be sued for breaching a website’s terms and conditions.

Are ChatGPT texts or Midjourney images protected by copyright? A US federal judge ruled that content generated by artificial intelligence can’t be copyrighted. If other rulings and jurisdictions confirmed this, the content of entire AI-generated websites could go unprotected.

These two points draw an interesting line for web scraping: as long as the act of collecting it doesn’t break the law, such data seems legitimate to collect.

2. The visibility of data

Data on the internet can be accessed in many ways, some more legal than others.

It is easy to see that breaking into computer systems to access information is different from accessing information available to anyone without particular technical skills.

Non-public information

Any internal website, company portal, or other system that prevents outsiders from seeing its information is non-public: only the company’s employees can see it. Likewise, information reserved for a single individual (like a bank account) that requires the user’s credentials to access is non-public.

If the user has consented to the data being scraped, and the conditions of that consent are met, scraping is possible. If any of these elements is missing, web scraping is very risky. A basic rule of thumb: if you need to hack it to see it, don’t scrape it.

Public information

When anyone with an internet connection can see the information, that is public.

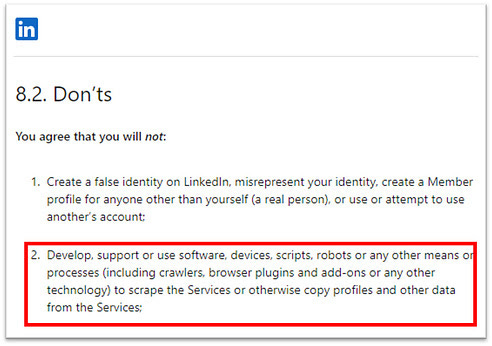

Some websites, even public ones, require users to agree to terms and conditions before they can see the content. If you don’t agree, you get no access.

It is quite likely that these terms prohibit web scraping (see the LinkedIn example here).

According to the self-guidance organizations mentioned above, only terms and conditions that users must explicitly accept (click-wrap ToS) are valid, while generic footer notices (browse-wrap ToS) do not provide sufficient grounds for enforcement.

Robots.txt

This is probably the most relevant element for web scraping activities: a small file called robots.txt, located at www.websitename.com/robots.txt, in which a website gives robots explicit instructions.

This file, present on many but not all websites, tells robots where they are allowed to crawl. It is not law. It is a “de facto standard”, born in 1994 and formalized by the IETF as RFC 9309 in 2022: the website owner’s way of telling bots where they may go.
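For a scraper, honoring robots.txt usually means checking each URL before requesting it. Python’s standard library ships urllib.robotparser for this. The sketch below parses a hypothetical robots.txt already held in memory; the file contents, the example.com URLs, and the ExampleDataBot user agent are illustrative assumptions, not taken from any real website. Against a live site you would call set_url() and read() instead of parse(). As far as we know, this parser follows the original 1994 conventions: it applies the first rule that matches, in file order, and does not expand * wildcards inside paths, so it may read some modern files differently from major search-engine crawlers.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an illustrative site; not a real file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

USER_AGENT = "ExampleDataBot"  # hypothetical bot name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    # Product pages are not disallowed for "*", so they may be crawled.
    print(rp.can_fetch(USER_AGENT, "https://www.example.com/products/blue-t-shirt"))  # True
    # Anything under /checkout/ is disallowed.
    print(rp.can_fetch(USER_AGENT, "https://www.example.com/checkout/cart"))  # False
    # Seconds the site asks bots to wait between requests (None if unset).
    print(rp.crawl_delay(USER_AGENT))  # 5
```

Checking before each request, and sleeping for the crawl delay between requests, is the polite default; skipping the check does not make a disallowed page any less disallowed.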

For example, the Farfetch.com file https://www.farfetch.com/robots.txt contains this line:

Allow: /it/*?*lang=it-IT

This tells bots they are allowed to crawl pages under www.farfetch.com/it/ whose URL query string contains lang=it-IT (each * is a wildcard).
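Wildcard rules like the Farfetch line are interpreted under RFC 9309: * matches any sequence of characters, a trailing $ anchors the end of the URL, and when several rules match, the longest one wins, with Allow winning ties. The sketch below is a simplified illustration of that matching, assuming a single “User-agent: *” group and ignoring percent-encoding normalization. Only the Allow line comes from the example above; the Disallow: /it/ line is a hypothetical addition to show precedence, and Farfetch’s live file may differ.

```python
import re
from typing import List, Tuple

# Only the Allow line comes from the Farfetch example; the Disallow line is a
# hypothetical addition to show precedence. A single "*" group is assumed.
ROBOTS_TXT = """\
User-agent: *
Disallow: /it/
Allow: /it/*?*lang=it-IT
"""


def parse_rules(robots_txt: str) -> List[Tuple[str, str]]:
    """Collect (directive, path) pairs, ignoring user-agent grouping."""
    rules = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field = field.strip().lower()
        if field in ("allow", "disallow"):
            rules.append((field, value.strip()))
    return rules


def rule_to_regex(path: str) -> "re.Pattern[str]":
    """'*' matches any run of characters; a trailing '$' anchors the end."""
    anchored = path.endswith("$")
    if anchored:
        path = path[:-1]
    body = ".*".join(re.escape(part) for part in path.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: List[Tuple[str, str]], path_and_query: str) -> bool:
    """Longest matching rule wins; on a tie Allow wins; no match means allowed."""
    best_len, allowed = -1, True
    for directive, path in rules:
        if not path:
            continue  # an empty rule matches nothing
        # re.match anchors at the start, so rules act as path prefixes.
        if rule_to_regex(path).match(path_and_query):
            if len(path) > best_len or (len(path) == best_len and directive == "allow"):
                best_len, allowed = len(path), directive == "allow"
    return allowed


if __name__ == "__main__":
    rules = parse_rules(ROBOTS_TXT)
    print(is_allowed(rules, "/it/shopping/women/items.aspx?lang=it-IT"))  # True
    print(is_allowed(rules, "/it/shopping/women/items.aspx"))  # False
    print(is_allowed(rules, "/uk/shopping/women/items.aspx"))  # True
```

The first URL is allowed because the 17-character Allow rule is more specific than the 4-character Disallow rule; the second has no query string, so only the Disallow rule matches.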

How can a data buyer know?

Assessing whether web-scraped data violates IP rights, database laws, or robots.txt instructions can be quite exhausting. This is why we promote safe access to data.

Web scraping is only a tool, just like a hammer. You can use the hammer to build useful things like a bridge or a house, or use it fraudulently to smash a car’s window and steal from it. Understanding the difference defines how we interact with society.

We do our best to help web scraping, which underpins so many of the technologies and websites we know and use, move past its “Wild West moment”.

Web data is everywhere. We need to start treating it and trading it properly.

About the Project

That was it for this week!

Data Boutique is a community for sustainable, ethical, high-quality web data exchanges. You can browse the current catalog and add your request if a website is not listed. Saving datasets to your interest list helps sellers gauge the demand for datasets and decide to join the platform.

More on this project can be found on our Discord channels.

Thanks for reading and sharing this.