How Ground Truth Testing Can Help Web Data

A Web Data QA approach on DataBoutique.com

About Data Boutique

Data Boutique is a web-scraped data marketplace.

If you’re looking for web data, there is a high chance someone is already collecting it. Data Boutique makes it easier to buy web data from them.

Join our Discord channels to learn more about this project and interact with the community.

Data Quality: A Major Issue in Web Data

Poor data quality affects all kinds of data. Quality Assurance (QA) accounts for a significant portion of the effort in data projects, and no data source is immune.

Web data, however, is more exposed: users can check the original website (or at least a portion of it) with no effort and immediately spot inconsistencies, which undermines their trust very quickly.

We call this the Ground Truth Test exposure.

What Are Ground Truth Tests (GTT)

According to Wikipedia:

Ground truth is information that is known to be real or true, provided by direct observation and measurement (i.e. empirical evidence)

In web data, Ground Truth is the information users can see directly on the website.

Let’s assume we are buying a dataset with all Airbnb listings in Europe available to book on a given day. How do we know it really contains all listings? How do we know we are buying a complete extraction? We can’t manually count all Airbnb listings in Europe (and be sure we didn’t make mistakes ourselves). All we can do is analyze a small sample:

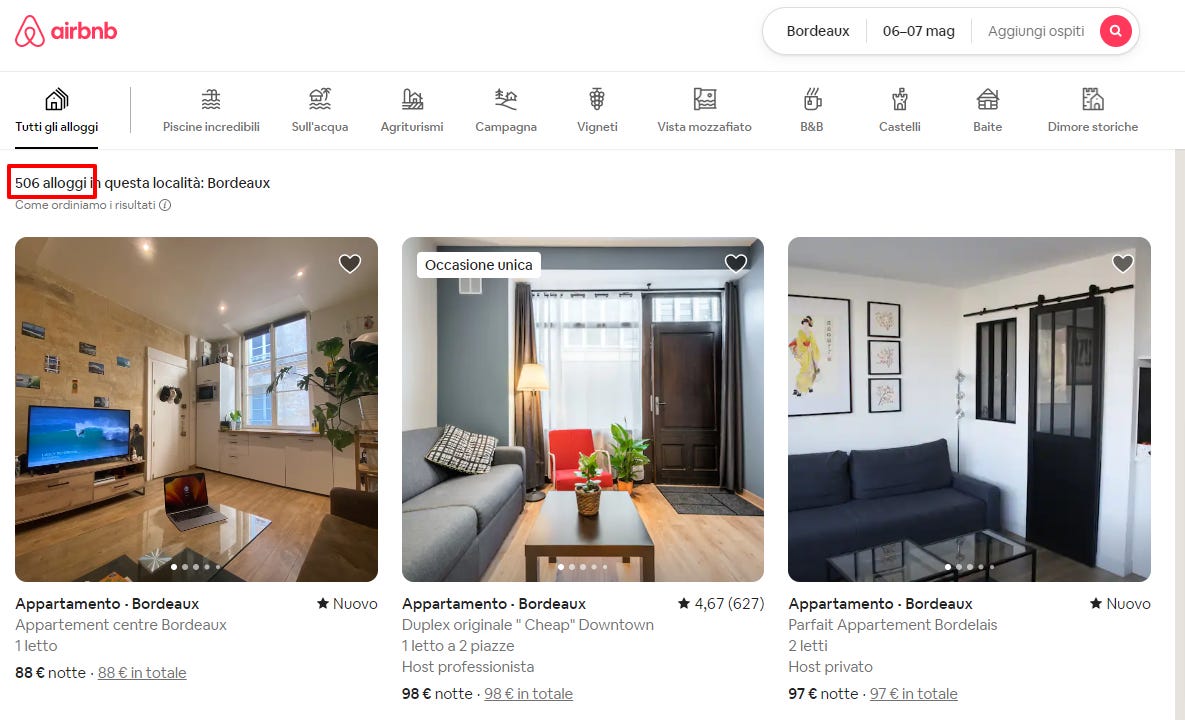

We can go to the website, run a search, and see that there are 506 available listings in Bordeaux, France, for May 6th through May 7th (as checked on April 23rd).

If the dataset also shows 506 listings for Bordeaux, we trust the data and buy it.
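In code, this check amounts to counting the dataset rows that match the same filters used on the website and comparing that count with the number observed there. The sketch below is a minimal illustration only: the `Listing` record, its fields, and the `ground_truth_test` function are hypothetical, and the year in the dates is an arbitrary placeholder since the example gives only days and months.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Listing:
    # Hypothetical layout of one scraped record
    listing_id: str
    city: str
    check_in: date
    check_out: date


def ground_truth_test(rows: list[Listing], city: str, check_in: date,
                      check_out: date, expected: int) -> bool:
    """Return True if the dataset count equals the count observed on the website."""
    actual = sum(
        1 for r in rows
        if r.city == city and r.check_in == check_in and r.check_out == check_out
    )
    return actual == expected


# Synthetic dataset with 506 Bordeaux listings (year chosen arbitrarily)
rows = [Listing(f"id{i}", "Bordeaux", date(2023, 5, 6), date(2023, 5, 7)) for i in range(506)]
print(ground_truth_test(rows, "Bordeaux", date(2023, 5, 6), date(2023, 5, 7), 506))  # True
```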

Users’ GTT Will Likely Fail

The problem with this approach is that the test will likely fail, because the process contains several flaws:

Airbnb search results also include listings from nearby locations (the expected number is wrong)

The day of the test is different from the day the data was collected (the expected number is wrong)

If the data provider ran a test at all, it was likely on a different sample, so any issues specific to this test case never came up (the dataset is also wrong, but the seller was unaware of it).
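One of these pitfalls, the date mismatch, can at least be detected mechanically. The following sketch assumes (hypothetically) that both the website observation and the dataset snapshot carry a date; when the dates differ, it reports the result as inconclusive rather than as a failure, because the expected number itself may be wrong. It does not address the scope problem (nearby locations), which depends on how the filters are defined. The names and dates are illustrative, with an arbitrary year.

```python
from datetime import date
from enum import Enum


class GTTResult(Enum):
    PASS = "pass"
    FAIL = "fail"
    INCONCLUSIVE = "inconclusive"  # observation and dataset are not comparable


def compare(expected: int, observed_on: date, actual: int, collected_on: date) -> GTTResult:
    """Compare a website count with a dataset count only if both refer to the same day."""
    if observed_on != collected_on:
        # A count read on one day says little about data collected on another
        return GTTResult.INCONCLUSIVE
    return GTTResult.PASS if actual == expected else GTTResult.FAIL


print(compare(506, date(2023, 4, 23), 506, date(2023, 4, 21)))  # GTTResult.INCONCLUSIVE
```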

The problem is significant: how can we ever trust a dataset in this scenario?

A Structured Approach to GTT

Ground Truth Tests (GTT) are not merely the only option users have: they are also easy to perform and easy to understand, which makes them the building blocks of trust. If we build a structured process around them, we can help web data grow further.

At Data Boutique, we reinforced our domain-quality and time-series consistency controls with a GTT process that follows these principles:

S.M.A.R.T. Metrics (our own version):

Specific: GTT cases are defined unambiguously as a set of filters to apply on the website, such as “number of Balenciaga products on Farfetch.com in Italy” or “number of job listings for Software Engineer in Zurich on Indeed.com”;

Measurable: each case results in an exact number, such as a product or item count. This number is captured through an online data-entry form, stored, and used as a benchmark against the comparable metric in the web-collected dataset;

Achievable: any user, regardless of technical expertise, must be able to perform the test, with no coding or data science skills required. If the website does not display an explicit item count, the number of items to count manually must be reasonable;

Relevant: tests must be representative of the website, cover different cases, and allow fair (but achievable) coverage;

Time-bound: given the volatility of websites, the metrics have limited validity; when they expire, they must be refreshed.
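The S.M.A.R.T. properties map naturally onto a small record per test case. This is a sketch under assumptions, not Data Boutique's actual schema: the `GTTCase` fields are hypothetical, and the seven-day validity is an arbitrary default chosen for the example. The usage reuses the Bordeaux figure from the Airbnb example, with an arbitrary year.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class GTTCase:
    website: str
    filters: dict[str, str]   # Specific: the exact filters applied on the website
    expected_count: int       # Measurable: the exact number observed
    observed_at: datetime     # when the count was read on the website
    validity: timedelta = timedelta(days=7)  # Time-bound: arbitrary example value

    def is_expired(self, now: datetime) -> bool:
        """A case expires once its validity window has elapsed."""
        return now >= self.observed_at + self.validity


case = GTTCase(
    website="airbnb.com",
    filters={"location": "Bordeaux, France", "check_in": "May 6", "check_out": "May 7"},
    expected_count=506,
    observed_at=datetime(2023, 4, 23),
)
print(case.is_expired(datetime(2023, 4, 25)))  # False
print(case.is_expired(datetime(2023, 5, 1)))   # True
```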

Shared and Agreed Upon: Users on the platform agree that data validation will happen through these tests. This way, the sellers can diagnose their data upstream, and the buyers can shorten their adoption process once they accept (and trust) the tests already in place.

Collaborative: All accredited Data Boutique users can update and peer-review GTT entries. A consensus-like mechanism protects the process from misuse and economically rewards collaboration. This reward-based system enables good coverage of test cases and provides a scalable way to cover thousands of websites.
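The consensus-like mechanism is not described in detail here. As a purely hypothetical illustration, one simple rule is a quorum: a GTT value is accepted only when enough distinct accredited users report the same count, and a tie between two values yields no consensus. The `consensus_value` function and its default `quorum` of 3 are assumptions for this sketch, not the platform's actual rule.

```python
from collections import Counter
from typing import Optional


def consensus_value(reports: dict[str, int], quorum: int = 3) -> Optional[int]:
    """Return the count reported by at least `quorum` distinct users, else None.

    `reports` maps a (hypothetical) user id to the count that user entered, so
    each user contributes at most one vote.
    """
    if not reports:
        return None
    ranked = Counter(reports.values()).most_common(2)
    value, votes = ranked[0]
    if votes < quorum:
        return None  # not enough agreement yet
    if len(ranked) > 1 and ranked[1][1] == votes:
        return None  # two values tied at the top: no consensus
    return value


print(consensus_value({"u1": 506, "u2": 506, "u3": 506, "u4": 505}))  # 506
```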

The implementation workflow is quite linear:

An initial GTT case is submitted when a dataset is onboarded. Each website's data structure has a simple data-entry form, where any accredited user can run the test and enter the expected values.

GTT cases are updated at two points: upon expiry, and each time a dataset fails a test (since website counts can change rapidly). If the dataset still fails after the GTT case is updated, the data submission is refused.

The final customer receives only data that passed the tests.
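The update-and-retest rule in this workflow can be sketched as a small gate for a single GTT case. This is a minimal illustration under assumptions: `accept_submission` is a hypothetical name, and `refresh_expected` stands in for the manual step in which a user re-runs the test on the website and enters the new count.

```python
from typing import Callable


def accept_submission(actual: int, expected: int, expired: bool,
                      refresh_expected: Callable[[], int]) -> bool:
    """Decide whether a dataset submission passes a single GTT case.

    `actual` is the count in the submitted dataset, `expected` the stored GTT
    value. `refresh_expected` is a hypothetical callback standing in for a user
    re-running the test on the website and entering the new count.
    """
    refreshed = False
    if expired:
        # Expired cases must be refreshed before they can be used
        expected = refresh_expected()
        refreshed = True
    if actual == expected:
        return True
    if refreshed:
        return False  # already compared against a fresh value: refuse
    # Website counts change quickly, so update the GTT case and retest once
    return actual == refresh_expected()


print(accept_submission(506, 510, False, lambda: 506))  # True: the website count had changed
print(accept_submission(480, 510, False, lambda: 506))  # False: still fails, submission refused
```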

When a dataset has no valid GTT cases, it cannot be published. The consensus mechanism also caps the share of GTT cases that the seller of that specific dataset can submit (max 70%).
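The two rules above can be expressed as a single check. In this hypothetical sketch, the 70% cap is computed over the dataset's currently valid GTT cases; whether expired cases count toward the limit is not specified, so that choice is an assumption, as are the `CaseInfo` and `can_publish` names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseInfo:
    submitted_by: str  # hypothetical user id of whoever entered the GTT case
    valid: bool        # False once the case has expired


def can_publish(cases: list[CaseInfo], seller: str,
                max_seller_share: float = 0.70) -> bool:
    """Return True if the dataset has valid GTT cases and the seller's share is within the cap."""
    valid = [c for c in cases if c.valid]
    if not valid:
        return False  # no valid GTT cases: the dataset cannot be published
    from_seller = sum(1 for c in valid if c.submitted_by == seller)
    return from_seller / len(valid) <= max_seller_share


cases = [CaseInfo("seller", True)] * 7 + [CaseInfo("alice", True)] * 3
print(can_publish(cases, "seller"))  # True: 7 of 10 valid cases, exactly 70%
```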

The seller has a strong incentive to update a failing GTT case quickly (the sooner it is updated, the sooner the data passes the test). The community of users, whose rewards depend on total platform revenue, has a longer-term incentive to keep updating and adding tests.

The Benefits of GTT in Data Boutique

Increased number of tests performed on data

Increased quality of onboarded data, as sellers have more tools to self-diagnose their extractions.

Reduction of disputes arising from mismatched end-user expectations.

Increased buyer trust (our ultimate goal), which translates into more purchases.

Join the Project

Data Boutique is a community for sustainable, ethical, high-quality web data exchange. You can browse the current catalog and add a request if a website is not listed. Saving datasets to your interest list helps sellers size the demand for datasets correctly and onboard them onto the platform.

More on this project can be found on our Discord channels.

Thanks for reading and sharing this.