Capex is Killing Web Data Projects

An Elastic Web Data Approach by DataBoutique.com

About Data Boutique

Data Boutique is a web-scraped data marketplace.

If you’re looking for web data, there is a good chance someone is already collecting it. Data Boutique makes it easier to buy web data from them.

Join our Discord channels to learn more about this project and interact with the community.

The Cost of Web Data Projects

It is hard to evaluate the cost of a web data project, as it requires tracking resources down to each website, but we’ll try to lay out a broad framework.

Simply put, costs fall into the following classes:

Labor costs: web-scraper coding and QA hours of employees or freelancers. These can be in-house or outsourced; usually, they are split into a set-up phase and a maintenance phase.

Infrastructure costs: databases, servers, bandwidth. These can sometimes be outsourced under a so-called “managed model”.

External services: mainly proxy networks, unblockers, and similar services.

The dollar weight of each class varies greatly from website to website, depending on:

website size (number of items to collect or pages to crawl)

how often the website structure changes

website anti-bot protection
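To make the framework concrete, here is a minimal Python sketch of a per-website cost model. It assumes that labor splits into a one-off set-up effort and a monthly maintenance effort, that infrastructure is a flat monthly fee, and that external services are billed per page crawled. The class name `ScrapingProject`, its fields, and every number in the usage section are hypothetical placeholders, not figures from Opymas or Data Boutique.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ScrapingProject:
    """Hypothetical cost drivers for scraping one website; all figures are illustrative."""

    setup_hours: float          # labor: coding the scraper and the QA process (set-up phase)
    maintenance_hours: float    # labor per month; grows with how often the site structure changes
    hourly_rate: float          # cost of one labor hour, in-house or outsourced
    infra_per_month: float      # infrastructure: databases, servers, bandwidth
    pages_per_run: int          # website size: pages crawled per run
    runs_per_month: int         # delivery frequency: 1 = monthly, 4 = weekly, 30 = daily
    proxy_cost_per_page: float  # external services; grows with anti-bot protection

    def setup_cost(self) -> float:
        """One-off investment paid before the project reaches production (the Capex)."""
        return self.setup_hours * self.hourly_rate

    def monthly_cost(self) -> float:
        """Recurring cost once in production: maintenance, infrastructure, and proxies."""
        maintenance = self.maintenance_hours * self.hourly_rate
        external = self.pages_per_run * self.runs_per_month * self.proxy_cost_per_page
        return maintenance + self.infra_per_month + external

    def total_cost(self, months: int) -> float:
        """Set-up cost plus `months` of production."""
        return self.setup_cost() + months * self.monthly_cost()


if __name__ == "__main__":
    weekly = ScrapingProject(
        setup_hours=40, maintenance_hours=4, hourly_rate=50.0, infra_per_month=100.0,
        pages_per_run=10_000, runs_per_month=4, proxy_cost_per_page=0.001,
    )
    daily = replace(weekly, runs_per_month=30)  # same site, scraped daily instead of weekly
    for name, project in (("weekly", weekly), ("daily", daily)):
        print(f"{name}: set-up {project.setup_cost():,.0f}, "
              f"monthly {project.monthly_cost():,.0f}, "
              f"first year {project.total_cost(12):,.0f}")
```

For simplicity, the sketch keeps set-up hours fixed across delivery frequencies; in a real project, moving from weekly to daily scraping can also change the set-up work itself.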

Research by Opymas in 2022 estimates that 75% of costs are internal, but our read of the market suggests that proxy networks are gaining share of wallet quite rapidly.

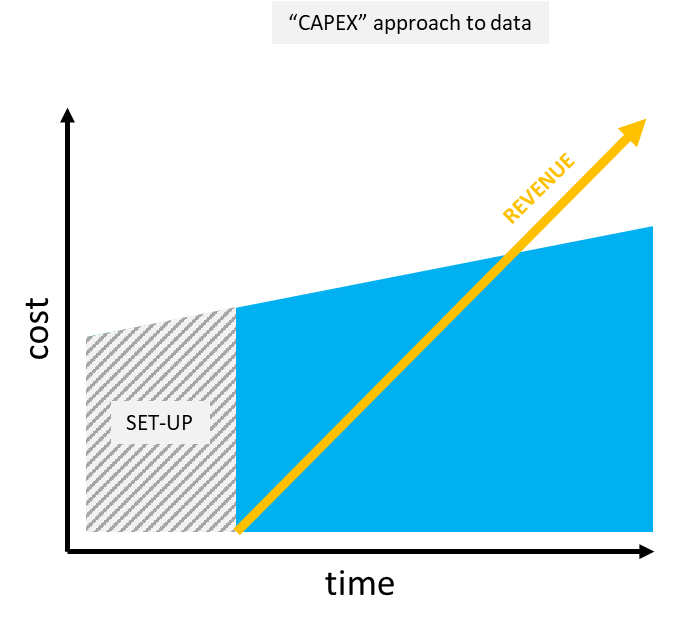

Capex, set-up times, and fixed costs

The initial investment goes mainly into labor: coding the scrapers and setting up the QA process. Together with your staff’s availability, this work determines how long set-up takes.

Variable external costs, by contrast, start only once the project is in production.

However, even though external costs are variable, the project is “inelastic” to change: variable costs become de facto fixed costs because they are hard to remove.

In fact:

When deciding to scrape a website, we need to define upfront whether we will scrape it monthly, weekly, or daily. This decision shapes the entire set-up investment, and we don’t want to change our minds later, only to find that we need to reinvest or that we are underusing what we built (in both cases, we end up rebuilding everything we did during set-up).

Once we decide something, it is likely to stay set in stone, because changing it is painful.

Consequently, only projects whose scope is already set in stone get a “go” (no one likes throwing Capex in the garbage can). In other words, Capex is killing new PoCs, demos, MVPs, and next-feature ideas.

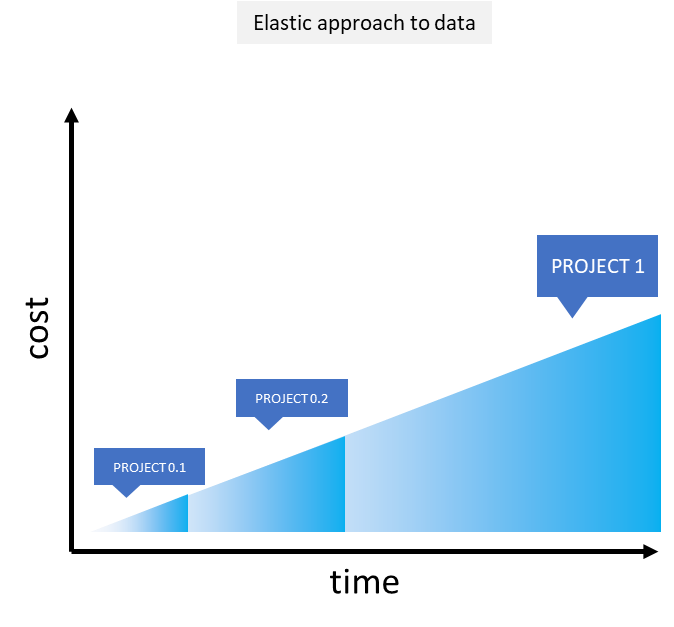

Elastic approach to data

We created Data Boutique precisely for this: to make every web data project economically viable and to unlock new value in information gathered from the Internet.

From day one, we aimed to make data access Elastic: no Capex, no commitments, and full scalability.

The Data-driven VC post of April 27th describes the effect of an elastic business model on the industry:

The primary effect that AWS had was on the initial fundraising by startups rather than on the cost of running the business at scale — effectively allowing startups to shift their large capital investments to later stages when uncertainty had been resolved. (Ewens, Nanda, and Rhodes-Kropf, 2018)

Variabilize everything

OK, cool. But what does elastic actually mean?

Fractionable

On Data Boutique, there is no commitment on duration. You can split your purchase down to a single file, with no obligation to buy more.

Example: Do you need web data from H&M’s e-commerce site to see whether it fits your PoC or project? Click, download, and done. No set-up work, very low marginal cost.

Scalable

On Data Boutique, you can increase the delivery frequency or add new websites, growing your scope as large as you need.

Example: Does H&M data look suitable for your project? Make it weekly or daily. Add data from 10 European countries, the US, and China. Add Zara, Uniqlo, Bershka, Primark, Mango, Boohoo, Forever21, Shein, FashionNOVA, OVS, and Benetton.

Click, confirm, and done. Went too far? Scale it down with the same ease. No commitments.

Modular

On Data Boutique, you can stack data together like LEGO bricks. Data structures are compatible and can be stacked on top of one another: all Product List Page datasets have the same fields, with the same number, order, types, and domain controls. The same applies to the other data structures.

H&M fits with Zara, which fits with Uniqlo, and so on.
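As an illustration of what shared structures make possible, the Python sketch below stacks Product List Page (PLP) files from two websites after checking that their headers match. The field list `PLP_FIELDS`, the helper names, and the sample rows are assumptions for illustration only; they are not Data Boutique’s actual schema, and this version checks only field names and order, not the type and domain controls mentioned above.

```python
import csv
import io

# Hypothetical shared schema for Product List Page (PLP) files: same names, same order.
PLP_FIELDS = ["website", "country", "product_code", "product_name", "price", "currency"]


def is_compatible(csv_text: str) -> bool:
    """True if the file's header matches PLP_FIELDS exactly (names and order)."""
    header = next(csv.reader(io.StringIO(csv_text)), [])
    return header == PLP_FIELDS


def read_plp(csv_text: str) -> list[dict[str, str]]:
    """Parse one PLP file, refusing files whose header does not match the shared schema."""
    if not is_compatible(csv_text):
        raise ValueError("incompatible schema: header does not match PLP_FIELDS")
    return list(csv.DictReader(io.StringIO(csv_text)))


def stack(*csv_texts: str) -> list[dict[str, str]]:
    """Concatenate compatible PLP files into one table, brick on brick."""
    rows: list[dict[str, str]] = []
    for text in csv_texts:
        rows.extend(read_plp(text))
    return rows


if __name__ == "__main__":
    header = ",".join(PLP_FIELDS)
    # Made-up sample rows, for illustration only.
    hm = header + "\nhm,DE,HM-001,sample item,9.99,EUR\n"
    zara = header + "\nzara,ES,ZR-001,sample item,19.95,EUR\n"
    for row in stack(hm, zara):
        print(row["website"], row["product_code"], row["price"])
```

Because every file shares one header, adding a third website is just one more argument to `stack`, with no per-site mapping code.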

Instant

When a dataset is LIVE, access to the data is immediate and fully self-service.

No long-term deals, minimum purchases, bundles, “contact sales” forms, or anything else. Click, confirm, and done.

There should be no friction between users and their data, whether the user is a business consultant working at 3 AM on the market-research slide of an M&A presentation or a startup founder working weekends on a budget.

New Use Cases

Once we understand the Elastic model, a whole new set of use cases opens up.

In an upfront-investment scenario (the Capex case), there is a lag before we can see results (a.k.a. “the engineers are busy right now; they’ll handle it next week”).

In the Elastic scenario, not only does set-up time drop to zero, but we can also make partial deliveries that are released earlier on the roadmap. We can take a leaner approach: build incrementally, stop, test, and then restart.

The upside: your project can go live much sooner. Ideally, you buy just one small piece of data, build your PoC, show it to your clients, and, once they agree, buy the rest of the data only when you start collecting revenue.

This brings the break-even point earlier in the pipeline and lowers your risk exposure.
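The break-even argument can be checked with a toy cash-flow model. The Python sketch below, assuming constant monthly costs and revenue, returns the first month in which cumulative cash flow turns non-negative. The function name and all inputs in the usage section are hypothetical and do not reflect Data Boutique pricing.

```python
from typing import Optional


def break_even_month(
    upfront: float,
    cost_before_revenue: float,
    cost_after_revenue: float,
    monthly_revenue: float,
    revenue_start: int,
    horizon: int = 36,
) -> Optional[int]:
    """Return the first month (1-based) in which cumulative cash flow is non-negative.

    upfront: money spent before month 1 (set-up Capex, or a single sample file).
    cost_before_revenue / cost_after_revenue: monthly spend before and after go-live.
    revenue_start: first month in which the project earns revenue.
    Returns None if break-even is not reached within `horizon` months.
    """
    cumulative = -upfront
    for month in range(1, horizon + 1):
        if month >= revenue_start:
            cumulative += monthly_revenue - cost_after_revenue
        else:
            cumulative -= cost_before_revenue
        if cumulative >= 0:
            return month
    return None


if __name__ == "__main__":
    # Capex path (hypothetical): large set-up, three months of building, live in month 4.
    capex = break_even_month(20_000, 1_000, 1_000, 5_000, revenue_start=4)
    # Elastic path (hypothetical): one sample file, PoC approved, live in month 2,
    # with a higher running cost because the full dataset is bought as needed.
    elastic = break_even_month(500, 0, 1_500, 5_000, revenue_start=2)
    print(f"Capex break-even: month {capex}; elastic break-even: month {elastic}")
```

With these made-up inputs, the Capex path breaks even in month 9 and the elastic path in month 2, even though the elastic path pays more per month once live: shifting spend from upfront to pay-as-you-go is what moves the break-even point.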

Thinking Further

The flexibility of this model lets us think outside the box and deliver new kinds of projects:

One-off projects, like a standalone study.

Faster proofs of concept (PoCs) or MVPs, restricted in scope but fully functional, at a very low data cost. Such a PoC can be refreshed cheaply, and only when needed.

A mixed approach: keep your scraping in-house but add redundancy (download from Data Boutique only when your own scrape fails), or use Data Boutique as a QA benchmark with just one delivery per month.
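The mixed approach can be sketched as a fallback plus a QA check. In this Python sketch, the two data sources are passed in as plain callables, because the actual download mechanism from Data Boutique is not described here; `get_data`, `download_backup`, `coverage`, and the `product_code` key are hypothetical names chosen for illustration.

```python
import logging
from typing import Callable

Rows = list[dict[str, str]]


def get_data(
    scrape_in_house: Callable[[], Rows],
    download_backup: Callable[[], Rows],
) -> tuple[Rows, str]:
    """Prefer the in-house scrape; use the purchased dataset only when the scrape fails."""
    try:
        rows = scrape_in_house()
        if rows:  # an empty result is treated as a failed run
            return rows, "in-house"
        logging.warning("in-house scrape returned no rows")
    except Exception as exc:  # e.g. blocked by anti-bot protection or a changed page layout
        logging.warning("in-house scrape failed: %s", exc)
    return download_backup(), "backup"


def coverage(in_house: Rows, benchmark: Rows, key: str = "product_code") -> float:
    """Share of benchmark items also captured in-house: a simple monthly QA check."""
    reference = {row[key] for row in benchmark}
    if not reference:
        return 1.0
    captured = {row[key] for row in in_house} & reference
    return len(captured) / len(reference)


if __name__ == "__main__":
    def blocked_scraper() -> Rows:
        raise RuntimeError("HTTP 403")  # simulated anti-bot block

    def monthly_file() -> Rows:
        return [{"product_code": "A"}, {"product_code": "B"}]

    rows, source = get_data(blocked_scraper, monthly_file)
    print(source, len(rows))
    print(coverage([{"product_code": "A"}], monthly_file()))
```

Passing the sources as callables keeps the fallback logic independent of how either source is actually fetched, so the backup is only downloaded (and paid for) when it is needed.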

Opportunities are countless and limited only by our creativity. Email us with your thoughts about this.

Join the Project

Data Boutique is a community for sustainable, ethical, high-quality web data exchange. You can browse the current catalog and add a request if a website is not listed. Saving datasets to your interest list lets sellers size the demand for each dataset correctly and onboard onto the platform.

More on this project can be found on our Discord channels.

Thanks for reading and sharing this.